Introduction¶

Fabian Hassler from RWTH Aachen will present the topological invariants

Applications of topological invariants¶

Throughout the course we computed several times a topological invariant of different systems. When is calculating a topological invariant useful or necessary?

The toy model Hamiltonians that we considered do not really require a complicated calculation set up to figure out whether they are topological or not. However, there are two types of applications where a heavy duty algorithm is important. These are:

Ab-initio band structures calculations

Disordered systems

So, we start with a Hamiltonian of some complicated material, and we want to know if it is topological or not. What’s worse is that we don’t even know in advance if it is gapped or not! If the system is disordered (you’ll learn more about those next week), then in addition we can never simulate a truly infinite system. What we can do is to take chunks of finite size and hope that it behaves correctly like an infinite system once the sample size is large enough.

This Hamiltonian may have many parameters, so it’s a large matrix. For systems with a complicated band structure, is fixed to be the number of orbitals we need to faithfully approximate the Hamiltonian, while in disordered systems we would usually simulate a finite system with linear size , and so , with the dimensionality of the space.

There’s a simple rule which allows us to guess how large a disordered system should be, in order to correctly reproduce the topology of an infinite system.

If the typical decay length of the state at the Fermi level of a disordered system is , then we need to take a sample with to correctly calculate its topological properties.

The reason why this is true is that in a smaller sample the bulk is not ‘insulating enough’.

So now we know that the main problem is that we need to do something with a large matrix.

Computational costs¶

How expensive are the calculations? If you look in the literature, you’ll see wildly differently looking algorithms bundled together with different claims of performance. There is actually a very simple way to figure out, and it comes down to an almost universally correct empirical rule:

Whenever you need to do something with a dense matrix of size in a numerical calculation, the costs of doing it most likely are .

This is true almost no matter what you do: matrix multiplication, inversion, diagonalization, calculation of a determinant, and dozens of other decompositions all cost the same. The specific value of the numerical prefactor does vary.

The things become more complicated when the Hamiltonian becomes sparse, since then one may efficiently use that most entries in the matrix are zero. For example, multiplying two banded matrices has a cost , instead of .

Part of our intuition stays true. So since after diagonalizing a Hamiltonian we obtain an matrix of eigenvectors, typically we still need to perform number of operations.

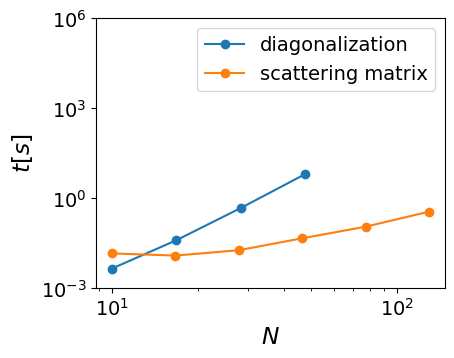

A scattering matrix is smaller than the matrix of all the eigenvectors, and for our sample with a linear size , the size of the scattering matrix is , as opposed to , the size of the Hamiltonian. While this fact isn’t trivial at all, calculation of a scattering matrix still costs the cube of its size, so .

This difference is most pronounced in 2D systems, where the cost of diagonalization results in more than an order of magnitude difference in the system size. On most modern computers diagonalization works up to system sizes of , and scattering matrix calculations work up to system sizes of . This can be best seen over here (but you can also test for yourself):

Source

def two_terminal(L, W):

t = 1.0

def shape(pos):

(x, y) = pos

return 0 <= y < W and 0 <= x < L

def lead_shape(pos):

(x, y) = pos

return 0 <= y < W

lat = kwant.lattice.square(norbs=1)

syst = kwant.Builder()

# definition of system

syst[lat.shape(shape, (0, 0))] = 3 * t

syst[kwant.HoppingKind((1, 0), lat)] = -t

syst[kwant.HoppingKind((0, 1), lat)] = -t

# definition of leads

lead = kwant.Builder(kwant.TranslationalSymmetry((-1, 0)))

lead[lat.shape(lead_shape, (0, 0))] = 3 * t

lead[kwant.HoppingKind((1, 0), lat)] = -t

lead[kwant.HoppingKind((0, 1), lat)] = -t

# attaching leads

syst.attach_lead(lead)

syst.attach_lead(lead.reversed())

return syst.finalized()

def diag_time(N):

syst = two_terminal(N, N)

start = time()

ham = syst.hamiltonian_submatrix()

ev, evec = sla.eigh(ham)

res = time() - start

return res

def smat_time(N):

syst = two_terminal(N, N)

start = time()

kwant.smatrix(syst)

res = time() - start

return res

Ns_diag = np.logspace(1, np.log10(80), 5)[:4]

diag_times = [diag_time(N) for N in Ns_diag]

Ns_smat = np.logspace(1, 3, 10)[:6]

smat_times = [smat_time(N) for N in Ns_smat]Source

plt.plot(Ns_diag, diag_times, "-o", label="diagonalization")

plt.plot(Ns_smat, smat_times, "-o", label="scattering matrix")

plt.xscale("log")

plt.yscale("log")

plt.xlabel("$N$")

plt.ylabel("$t [s]$")

plt.ylim(10**-3, 10**6)

plt.yticks([1e-3, 1, 1e3, 1e6])

plt.legend()

plt.show()

Momentum integration¶

As you saw, unless we’re lucky, a topological invariant is a -dimensional integral of something like winding number density or Berry curvature. If we deal with the scattering matrix, the Brillouin zone is once again reduced by one dimension, and the integral becomes -dimensional.

How many points in momentum space do we need? If we are integrating Berry curvature, we want the uncertainty of the integral to be , so that we can round it and get the correct answer. That means that if there’s a Dirac point with a small gap, we need to resolve it in momentum space, and the number of points should scale as .

If we consider a disordered system, we must consider a finite system of a large enough size , so that every point on the Brillouin zone is representative, and we just need a single point to effectively estimate the value of the integral (you’ll see later how to do this in one of the techniques).

So let’s take a system with orbitals in a unit cell, and edge state decay length . The cost of computing the topological invariant is:

for disordered systems using the full spectrum.

for band structures using the full spectrum.

for disordered systems using scattering approach.

for band structures using scattering approach.

A special thing about 1D¶

There is a special feature of topological invariants in 1D, that dramatically speeds up a calculation. It’s a computational trick that is so elegant and universal that we’d like to share it.

You might remember studying the SSH chain back in week one. This is a similar system to the Majorana chain, but it is characterized by chiral symmetry rather than particle-hole symmetry.

A 1D Bloch Hamiltonian with chiral symmetry has the form

with a matrix containing the onsite term , the left hopping and the right hopping .

If you look up the table, this Hamiltonian is characterized by a topological invariant. This invariant is the winding number that Fabian Hassler discussed in the introductory video. Let’s again describe its meaning.

We need to find an integer quantity which can only change when the gap of closes. Now, can only have a zero eigenvalue if does, that is if . For a gapped Hamiltonian, will in general be a complex number other than zero for all values of .

Let us now try to picture the path that takes in the complex plane as is varied from 0 to . This path must be closed, because must be equal to , and avoid the origin. We may therefore ask: how many times does the path go around the origin? This number must be integer, and it cannot change unless we make the path go through zero. It is our topological invariant, because we can distinguish a clockwise path from a counterclockwise path.

The mathematical expression for the winding number is more obscure than its meaning:

but it just counts how much the phase of increases as goes through the Brillouin zone.

How to take this integral? The trick is to use analytic continuation to a complex plane.

As varies from 0 to , goes around the unit circle in the complex plane. Let’s now make a substitution , with a complex number. The determinant becomes a polynomial function in , . We now need to compute the following integral on the unit circle

We can now use a nice result from complex analysis, the argument principle. It tells us that each zero of inside the unit circle contributes +1 to the value of , and each pole contributes -1. Hence, by counting the number of zeros and poles of inside the unit circle we immediately get the winding number. So, we have reduced the calculation of the topological invariant to finding zeros and poles of the expression . The poles are trivial to find, and they are all located at .

To find the zeros we notice that we need to solve a problem

This is a polynomial eigenvalue problem, and it is trivially mapped onto a standard eigenvalue problem.

So by finding all the eigenvalues we get all the zeros of inside the unit circle, and immediately obtain the 1D topological invariant:

Source

def random_sys(N=4):

onsite = randn(N, N) + 1j * randn(N, N)

lefthopping = randn(N, N) + 1j * randn(N, N)

righthopping = randn(N, N) + 1j * randn(N, N)

return onsite, lefthopping, righthopping

def find_singularities(onsite, lefthopping, righthopping):

# We will have a generalized eigenvalue problem. The two matrices alone do not have much of a physical meaning,

# so their names are also meaningless: A, B.

n = len(onsite)

A = np.block([[-righthopping, np.zeros((n, n))], [np.zeros((n, n)), np.eye(n)]])

B = np.block([[onsite, lefthopping], [np.eye(n), np.zeros((n, n))]])

return scipy.linalg.eigvals(A, B)

def winding_plot(onsite, lefthopping, righthopping):

singularities = find_singularities(onsite, lefthopping, righthopping)

winding = sum(abs(singularities) < 1) - len(onsite)

circle = np.exp(1j * np.linspace(-np.pi, np.pi, 30))

title = f"Winding number: ${winding}$"

fig = go.Figure()

fig.add_trace(

go.Scatter(

x=circle.real,

y=circle.imag,

mode="lines",

line=dict(color="black", dash="dash"),

showlegend=False,

)

)

fig.add_trace(

go.Scatter(

x=singularities.real,

y=singularities.imag,

mode="markers",

marker=dict(color="red", size=8),

name="zeros",

)

)

fig.add_trace(

go.Scatter(

x=[0],

y=[0],

mode="markers",

marker=dict(color="blue", size=8),

showlegend=False,

)

)

fig.update_layout(

title=title,

xaxis=dict(

title=r"$\operatorname{Re}(z)$",

range=[-2, 2],

scaleanchor="y",

scaleratio=1,

),

yaxis=dict(title=r"$\operatorname{Im}(z)$", range=[-2, 2]),

showlegend=True,

)

return fig

np.random.seed(30)

onsite_mat, lefthopping, righthopping = random_sys()

def winding_scale(scale):

return winding_plot(

4 * onsite_mat * 1.2 ** -abs(scale),

lefthopping * 1.2**scale,

righthopping * 1.2**-scale,

)

slider_plot({scale: winding_scale(scale) for scale in range(-10, 10)}, label="α")In the graph above, we see the zeros (red) and poles (blue) of for some randomly generated system, while we tune a parameter that gradually changes the topology of the system.

The approach of analytic continuation onto a complex plane works whenever we have only one momentum variable, so for any 1D system, or a 2D system where we compute the scattering, and allows to calculate the integral exactly and in one step.

Real space methods¶

There is also a broad class of algorithms that rely on the real space structure of the system, and on the inability to deform the wave functions of the filled state into completely localized orbitals.

The intuition behind these is coming from non-commutative geometry, and so it is very hard to explain intuitively. Let us try to illustrate the logic behind those.

The real space invariants are known for many different symmetry classes, but for concreteness let’s see how they work for the Chern insulators (and accordingly how we can compute the Chern number).

First, let’s make a finite but large system in a way that it is fully gapped. Of course if it’s topologically non-trivial, we need to remove the edge states, and we’ll do this by applying periodic boundary conditions in both - and -directions.

We can now define periodic and coordinates , . We can make continuous variables out of these by studying phase factors , and .

The rough idea is as follows. All the filled states in a gapped material can be made localized, but if the system is topological this localization of the states is never exact (since fully localized states would correspond to a trivial Hamiltonian).

Fully localized states would have their and coordinates commuting, so we could try to check if we can approximate and coordinates of the filled states with commuting matrices.

The and phases of the filled states are operators, and we can calculate them by projecting them onto the space of filled states:

We now need to determine if and approximately commute. Let’s consider the expression

First of all, is always an integer, because and the , so it is real.

If and commute, . It can only change if one of the eigenvalues of goes through 0 on a complex plane, which signals appearance of a delocalized mode.

So the Bott index looks like a Chern number, behaves like a Chern number, and it indeed is a Chern number.

To illustrate its behavior let’s plot the cumulative sum of the eigenvalues of , taking a disordered Chern insulator as a sample system:

Source

def onsite(site, t, mu):

return (4 * t - mu) * pauli.sz

def hopx(site1, site2, t, delta):

return -t * pauli.sz + 1j * delta * pauli.sx

def hopy(site1, site2, t, delta):

return -t * pauli.sz - 1j * delta * pauli.sy

# Construct the system

W = L = 12

lat = kwant.lattice.square(norbs=2)

chern_torus = kwant.Builder(kwant.TranslationalSymmetry((L, 0), (0, W)))

chern_torus[lat.shape((lambda site: True), (0, 0))] = onsite

chern_torus[kwant.HoppingKind((1, 0), lat)] = hopx

chern_torus[kwant.HoppingKind((0, 1), lat)] = hopy

chern_torus = kwant.wraparound.wraparound(chern_torus).finalized()

# Define phase operators

x, y = np.array([i.pos for i in chern_torus.sites]).T

# Double all entries to take orbitals into account.

x = np.resize(x, (2, len(x))).T.flatten()

y = np.resize(y, (2, len(y))).T.flatten()

x *= 2 * np.pi / (np.max(x) + 1)

y *= 2 * np.pi / (np.max(y) + 1)

op_x = np.diag(np.exp(1j * x))

op_y = np.diag(np.exp(1j * y))

def evaluate_m(syst, p, energy=0):

ham = syst.hamiltonian_submatrix(params=p)

energies, states = np.linalg.eigh(ham)

projector = states[:, energies < energy]

p_x = projector.T.conj() @ op_x @ projector

p_y = projector.T.conj() @ op_y @ projector

temp = p_x @ p_y @ p_x.T.conj() @ p_y.T.conj()

eigs = np.linalg.eigvals(temp)

# get rid of the four zero eigenvalues

res = np.sum(np.log(eigs))

res = res.imag / (2 * np.pi)

# This calculation is only correct to numerical accuracy.

# When the Chern number is zero the result might be a very small negative number.

# We make them positive so that the out put is never -0, because that does not look nice.

if abs(res) < 0.5:

res = abs(res)

xs = np.cumsum(np.log(eigs)).real

ys = np.cumsum(np.log(eigs)).imag

x = np.append([0], xs)

y = np.append([0], ys)

title = f"$m={p['mu']:.2}$, Chern number $={res:1.0f}$"

window_widening = (max(x) - min(x)) * 0.05

fig = go.Figure()

fig.add_trace(

go.Scatter(

x=x,

y=y,

mode="lines+markers",

line=dict(color="blue"),

marker=dict(size=6),

showlegend=False,

)

)

fig.update_layout(

title=title,

xaxis=dict(

title="", range=[min(x) - window_widening, max(x) + window_widening]

),

yaxis=dict(

title="",

range=[-2 * np.pi - 0.5, 0.5],

tickvals=[-2 * np.pi, 0],

ticktext=[r"$-2\pi$", r"$0$"],

),

)

return fig

p = dict(t=1.0, mu=0.1, delta=0.1, k_x=0, k_y=0)

slider_plot(

{p["mu"]: evaluate_m(chern_torus, p) for p["mu"] in np.linspace(-0.2, 0.4, 16)},

label="m",

)